The AI Leadership Paradox: Why Smart Leaders Are Getting AI Automation Wrong (Part 1 of 3)

TL;DR

- This is Part 1 of a three-part series exploring the AI Leadership Paradox and how organizations can master it.

- The more sophisticated AI systems become, the more they depend on human judgment for critical scenarios.

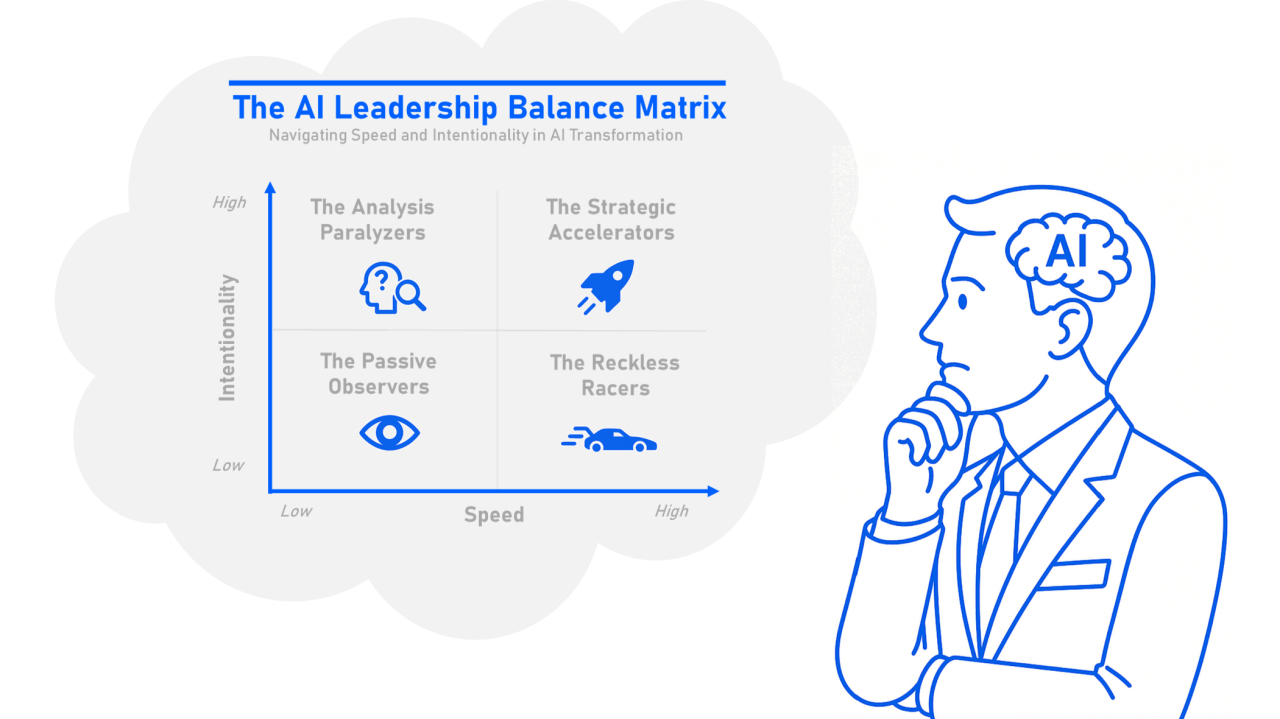

- Organizations typically fall into four archetypes: Reckless Racers, Analysis Paralyzers, Passive Observers, and Strategic Accelerators.

- Success requires balancing speed with intentionality while maintaining essential human competencies.

The Bainbridge Paradox Revisited

In 1983, automation researcher Lisanne Bainbridge uncovered a fundamental irony that haunts technology leaders to this day: "The more advanced a control system is, so the more crucial may be the contribution of the human operator." Writing about industrial automation, Bainbridge observed that designers who tried to eliminate human operators inevitably left them with "an arbitrary collection of tasks" that couldn't be automated: often the most difficult and critical responsibilities, but now without the skills, knowledge, or support systems needed to handle them effectively.

Four decades later, as artificial intelligence transforms every industry, Bainbridge's insight has never been more relevant. The organizations rushing to automate customer service, content creation, and decision-making processes are discovering the same paradox: the more sophisticated their AI systems become, the more they depend on human judgment, oversight, and intervention for the scenarios that matter most. Yet these humans are increasingly disconnected from the operational knowledge and situational awareness needed to step in when AI systems fail, hallucinate, or encounter edge cases.

This is the AI Leadership Paradox of our time: a challenge that extends far beyond simple technology deployment to fundamental questions of human capability, organizational design, and societal responsibility.

The Contemporary Challenge

An analysis of 100 notable quotes from more than 30 AI leaders across academia, enterprise, and policy reveals a consistent pattern: the central tension facing organizations today is balancing the speed of AI adoption with thoughtful governance. As Reid Hoffman, co-founder of LinkedIn, noted: "Fast followers who act with purpose, strong governance, and clarity on outcomes will outperform those who wait or race blindly."

This insight reveals what I call the AI Leadership Paradox: the seemingly contradictory need to accelerate AI adoption while simultaneously deepening governance and accountability. Like Bainbridge's industrial operators who found themselves monitoring systems they could no longer control, today's leaders face the challenge of governing AI systems that operate at speeds and scales beyond human comprehension, yet require human judgment for their most consequential decisions.

The Four Archetypes of AI Adoption

Before exploring solutions, it's essential to understand how organizations typically fail to balance speed and intentionality. My analysis reveals four distinct archetypes in AI adoption: patterns that echo Bainbridge's observations about how automation displaces rather than eliminates human challenges.

1. The Reckless Racers: High Speed, Low Intentionality

These organizations rush headlong into AI deployment without adequate frameworks, embodying exactly what Bainbridge warned against: implementing advanced systems while leaving operators with inadequate support for their remaining responsibilities. As Margaret Mitchell, Chief Ethics Scientist at Hugging Face, warns: "There's been a push to launch, launch, launch, even when the product is not necessarily ready, not necessarily high quality... we've seen the public learning this the hard way: there are catastrophic failures."

**The Result**: Short-term productivity gains followed by significant setbacks, compliance issues, and employee backlash when systems fail at critical moments.

2. The Analysis Paralyzers: Low Speed, High Intentionality

At the opposite extreme, some organizations become trapped in endless planning cycles, paralyzed by the very complexity that Bainbridge identified in human-machine systems. They over-analyze risks, wait for AI to "stabilize" before engaging, and create detailed governance structures that never see implementation.

**The Result**: Detailed frameworks that never get implemented while competitors gain insurmountable AI advantages through learning-by-doing.

3. The Passive Observers: Low Speed, Low Intentionality

Perhaps most dangerously, some organizations remain largely disengaged from AI transformation. These organizations face a compounding problem: as the industry evolves around AI, they simultaneously lose AI-capable talent and fall further behind in developing the organizational capabilities needed for effective AI adoption.

**The Result**: Eventual obsolescence as AI-native competitors gain advantages that become impossible to match.

4. The Strategic Accelerators: High Speed, High Intentionality

The winning approach combines rapid experimentation with thoughtful governance, directly addressing Bainbridge's core insight about maintaining human competencies alongside advanced automation. Strategic Accelerators move quickly but with clear frameworks, tie AI initiatives to specific business problems, and maintain strong ethical guardrails while experimenting.

**The Result**: Sustainable competitive advantage through AI that enhances rather than replaces human capabilities.
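For readers who think in code, the four archetypes reduce to a two-by-two grid over speed and intentionality. The sketch below is only an illustration of that grid: the names `Archetype` and `classify` are hypothetical, and treating each axis as a simple high/low flag is an assumption made for brevity, not a measurement method proposed in this article.

```python
from enum import Enum


class Archetype(Enum):
    RECKLESS_RACER = "Reckless Racer"                # high speed, low intentionality
    ANALYSIS_PARALYZER = "Analysis Paralyzer"        # low speed, high intentionality
    PASSIVE_OBSERVER = "Passive Observer"            # low speed, low intentionality
    STRATEGIC_ACCELERATOR = "Strategic Accelerator"  # high speed, high intentionality


def classify(high_speed: bool, high_intentionality: bool) -> Archetype:
    """Map the two axes of the grid to one of the four archetypes."""
    if high_speed and high_intentionality:
        return Archetype.STRATEGIC_ACCELERATOR
    if high_speed:
        return Archetype.RECKLESS_RACER
    if high_intentionality:
        return Archetype.ANALYSIS_PARALYZER
    return Archetype.PASSIVE_OBSERVER


if __name__ == "__main__":
    # Print the full grid, one line per quadrant.
    for speed in (True, False):
        for intent in (True, False):
            print(speed, intent, classify(speed, intent).value)
```

In practice neither axis is binary; an organization can sit in different quadrants for different AI initiatives, so the grid is best read as a diagnostic prompt rather than a scorecard.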

Principles for Success

Organizations that successfully navigate the AI Leadership Paradox follow several key principles:

1. **Start with Problems, Not Solutions**: Instead of asking "How can we use AI?" they ask "What business problems do we need to solve, and where can AI help?" This keeps human judgment at the center of the equation.

2. **Maintain "Operator Knowledge"**: Like Bainbridge's effective industrial operators, they ensure that the people responsible for AI systems understand not just how to use them, but how they work and where they might fail.

3. **Design for Human Override**: Every AI system includes clear mechanisms for human intervention, and the humans responsible for that intervention maintain the skills and situational awareness needed to use them effectively.

4. **Build Learning Loops**: Instead of deploying AI and walking away, they create continuous feedback mechanisms that improve both the AI systems and human understanding of how to work with them.
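Principles 3 and 4 above can be sketched together as one possible pattern: route low-confidence AI outputs to a person, and record what the person decided so both sides can learn from it. Everything in this sketch is an assumption for illustration, including the names `Decision` and `decide_with_override`, the idea that the model reports a confidence score, and the 0.8 threshold. Real systems will need richer escalation criteria than a single number.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Decision:
    answer: str
    confidence: float  # hypothetical self-reported score between 0.0 and 1.0


def decide_with_override(
    model: Callable[[str], Decision],
    human_review: Callable[[str, Decision], str],
    request: str,
    threshold: float = 0.8,  # assumed cutoff, chosen only for illustration
    feedback_log: Optional[List[Tuple[str, str, str]]] = None,
) -> str:
    """Return the model's answer, or a human's answer when confidence is low."""
    decision = model(request)
    if decision.confidence >= threshold:
        return decision.answer

    # Human override: the reviewer sees both the request and the model's attempt.
    final_answer = human_review(request, decision)

    # Learning loop: keep (request, model answer, human answer) for later review.
    if feedback_log is not None:
        feedback_log.append((request, decision.answer, final_answer))
    return final_answer


if __name__ == "__main__":
    log: List[Tuple[str, str, str]] = []
    unsure_model = lambda req: Decision(answer="approve", confidence=0.4)
    reviewer = lambda req, dec: "escalate to compliance"
    print(decide_with_override(unsure_model, reviewer, "refund request", feedback_log=log))
    print(log)
```

The code is the easy part. The override only works if the reviewer still has the operator knowledge described in principle 2, which is exactly the competency Bainbridge warned that automation erodes.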

The Path Forward

Mastering the AI Leadership Paradox isn't about choosing between speed and caution. It's about building organizational capabilities that allow you to move fast when appropriate and slow down when necessary, while always maintaining the human competencies needed for effective AI governance.

The organizations that will thrive in the AI era aren't those that automate the most tasks or deploy the most models. They're the ones that create symbiotic relationships between human judgment and artificial intelligence, recognizing that the true power of AI lies not in replacing human capabilities, but in augmenting them.

*This is Part 1 of a three-part series on mastering the AI Leadership Paradox. The framework builds on four decades of research into human-automation interaction, updated for the age of artificial intelligence.*

*Coming Next: "The Three Horizons of AI Transformation: A Roadmap for Strategic Accelerators"*